Malte Prinzler

Hi there,

I am a Ph.D. student with the Max Planck ETH Center for Learning Systems (CLS), supervised by Justus Thies (TU Darmstadt), Siyu Tang (ETH), and Otmar Hilliges (ETH).

My research focuses on reconstructing photo-realistic 3D avatars of the human head with affordable capturing hardware.

Such technology will be a crucial step towards next-gen telecommunication and entertainment, e.g., in immersive 3D video-conferencing and VR gaming.

Before my Ph.D., I obtained a Master's Degree in Physics from Heidelberg University and studied at the Manning College of Information and Computer Sciences at the University of Massachusetts, Amherst, USA under the supervision of Prof. Erik G. Learned-Miller.

Further, I worked as a Machine Learning Intern at SAS Institute.

If you'd like to have a chat, feel free to get in touch:

PROJECTS

Joker: Conditional 3D Head Synthesis with Extreme Facial Expressions

Malte Prinzler, Egor Zakharov, Vanessa Sklyarova, Berna Kabadayi, Justus Thies

3DV 2025

We introduce Joker, a new method for the conditional synthesis of 3D human heads with extreme expressions. Given a single reference image of a person, we synthesize a volumetric human head with the reference’s identity and a new expression. We offer control over the expression via a 3D morphable model (3DMM) and textual inputs. This multi-modal conditioning signal is essential since 3DMMs alone fail to define subtle emotional changes and extreme expressions, including those involving the mouth cavity and tongue articulation. Our method is built upon a 2D diffusion-based prior that generalizes well to out-of-domain samples, such as sculptures, heavy makeup, and paintings, while achieving high levels of expressiveness. To improve view consistency, we propose a new 3D distillation technique that converts predictions of our 2D prior into a neural radiance field (NeRF). Both the 2D prior and our distillation technique produce state-of-the-art results, which are confirmed by our extensive evaluations. Also, to the best of our knowledge, our method is the first to achieve view-consistent extreme tongue articulation.

DINER: Depth-aware Image-based NEural Radiance fields

Malte Prinzler, Otmar Hilliges, Justus Thies

CVPR 2023

We present Depth-aware Image-based NEural Radiance fields (DINER). Given a sparse set of RGB input views, we predict depth and feature maps to guide the reconstruction of a volumetric scene representation that allows us to render 3D objects under novel views. Specifically, we propose novel techniques to incorporate depth information into feature fusion and efficient scene sampling. In comparison to the previous state of the art, DINER achieves higher synthesis quality and can process input views with greater disparity. This allows us to capture scenes more completely without changing capturing hardware requirements and ultimately enables larger viewpoint changes during novel view synthesis. We evaluate our method by synthesizing novel views, both for human heads and for general objects, and observe significantly improved qualitative results and increased perceptual metrics compared to the previous state of the art. The code will be made publicly available for research purposes.

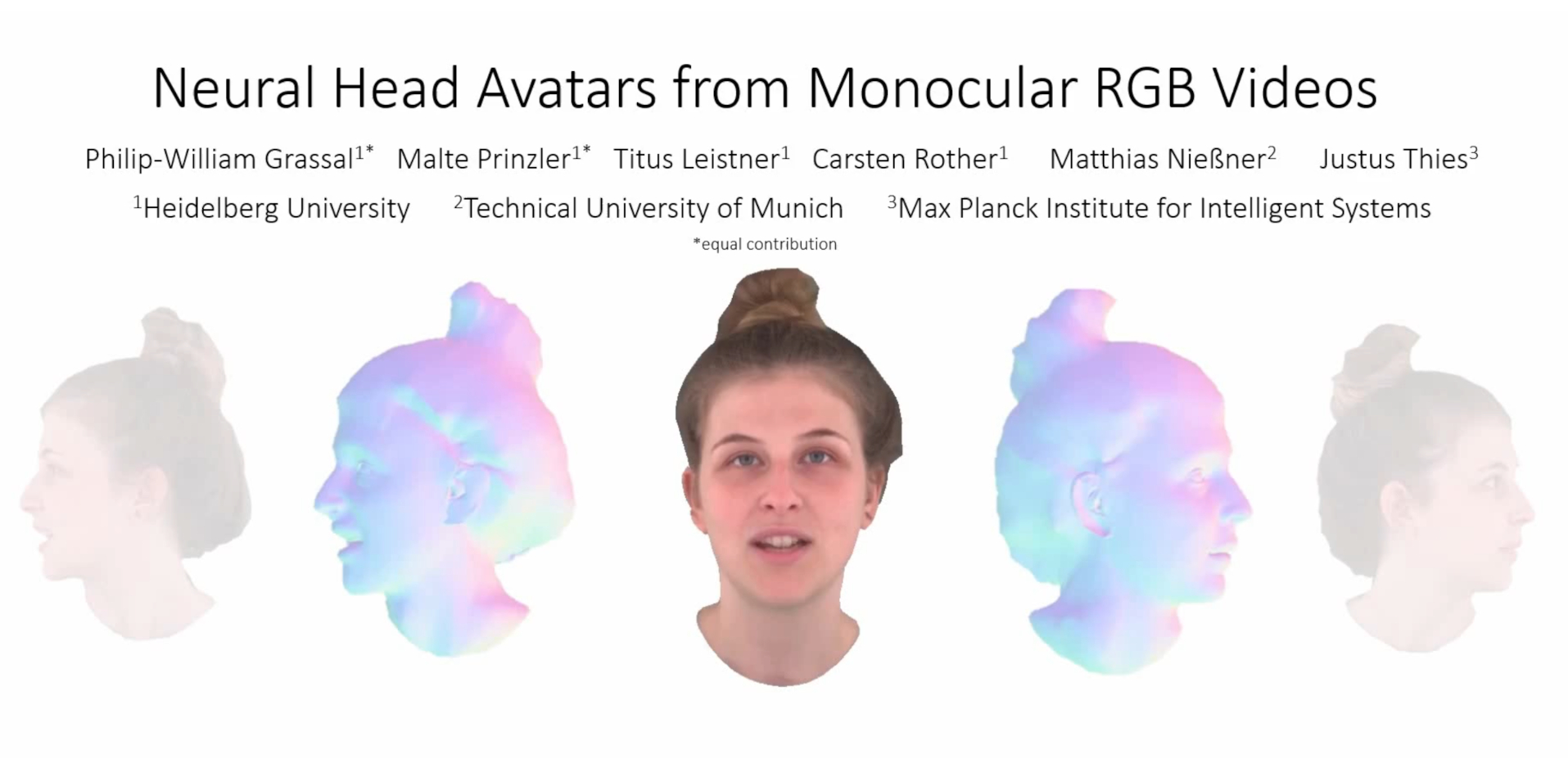

Neural Head Avatars from Monocular RGB Videos

Philip-William Grassal*, Malte Prinzler*, Titus Leistner, Carsten Rother, Matthias Nießner, Justus Thies (*equal contribution)

CVPR 2022

We present Neural Head Avatars, a novel neural representation that explicitly models the surface geometry and appearance of an animatable human avatar. Such avatars can be used for teleconferencing in AR/VR or for other applications in the movie or games industry that rely on a digital human. Our representation can be learned from a monocular RGB portrait video that features a range of different expressions and views. Specifically, we propose a hybrid representation consisting of a morphable model for the coarse shape and expressions of the face, and two feed-forward networks, predicting vertex offsets of the underlying mesh as well as a view- and expression-dependent texture. We demonstrate that this representation is able to accurately extrapolate to unseen poses and viewpoints, and generates natural expressions while providing sharp texture details. Compared to previous works on head avatars, our method provides a disentangled shape and appearance model of the complete human head (including hair) that is compatible with the standard graphics pipeline. Moreover, it quantitatively and qualitatively outperforms the current state of the art in terms of reconstruction quality and novel-view synthesis.

Visual Fashion Attribute Prediction

Internship project on vision-based regression of descriptive features for apparel products. Given an image of a garment, a 2D convolutional network extracts scores for a predefined set of attributes. This allows for attribute-based product similarity matching and recommendation applications. This project was part of the Kaggle iMaterialist Challenge (Fashion) at FGVC5. The code is publicly available through GitHub.

EXPERIENCE & EDUCATION

Presenter

Computer Vision and Pattern Recognition Conference (CVPR)

Jun 2023

Presented our paper DINER: Depth-aware Image-based NEural Radiance fields.

Ph.D. Student

Max Planck ETH Center for Learning Systems (CLS)

Since Mar 2022

Co-supervised by Justus Thies (TU Darmstadt), Siyu Tang (ETH), and Otmar Hilliges (ETH), my research focuses on reconstructing photo-realistic 3D avatars of the human head based on affordable capturing hardware.

Speaker

Rank Prize Symposium on Neural Rendering in Computer Vision

Aug 2022

Presented and discussed current research on neural rendering in computer vision with leading researchers in the field, among them Ben Mildenhall, Vladlen Koltun, Andrea Vedaldi, Vincent Sitzmann, Siyu Tang, Matthias Nießner, Justus Thies, and Jamie Shotton.

Presenter

Computer Vision and Pattern Recognition Conference (CVPR)

Jun 2022

Presented the paper Neural Head Avatars from Monocular RGB Videos.

Exchange Research Student

University of Massachusetts, Amherst, USA

Sep 2019 - May 2020

Graduate Student Exchange. I attended lectures on Natural Language Processing, Reinforcement Learning, and Quantum Computing. Further, I conducted research under the supervision of Erik G. Learned-Miller.